Hey there, I’m Devansh. I write for an audience of ~200K readers weekly. My goal is to help readers understand the most important ideas in AI and Tech from all important angles- social, economic, and technical. You can find my primary publication AI Made Simple over here, message me on LinkedIn, or reach out to me through any of my social media over here. I work as a consultant for clients looking to integrate AI in their lives- so please feel free to reach out if you think we can work together.

Executive Highlights (tl;dr of the article)

Teams everywhere are concerned about how to integrate AI into their workflows most effectively. If your organization has lots of money to burn, you could pay McKinsey consultants 400 USD/hour to create pretty slides based on recommendations from ChatGPT and spend 5 hours weekly in meetings to explore ‘synergies’ and ‘best practices’. But for those of you without that luxury, one of the best resources is to look at the research done by the productivity teams at major companies. These companies have dedicated teams that interview their employees, study workflows, and extract insights from various internal and external experiments conducted on productivity.

Today, we will be looking at Microsoft’s excellent “Microsoft New Future of Work Report 2023” to answer a key question- how can we leverage AI to make our work more productive? We will be studying the report to pull out interesting insights on-

1. How LLMs impact Information Work:

LLMs can significantly boost productivity for information workers by automating tasks like writing, content creation, and information retrieval. Studies show people complete tasks up to 73% faster and produce higher-quality output with LLM-based tools.

However, these tools also require careful evaluation and adaptation. While LLMs can be incredibly fast, their accuracy is not always guaranteed, and users need guidance on navigating the trade-offs between speed and correctness. There is also a high degree of overreliance, and users have to actively be cautioned against using AI outputs without verification.

Interestingly, AI seems to help the low performers a lot more than the studs. This likely has a lot to do with the way LLMs can democratize knowledge- and tell people what to do.

“In a lab experiment, participants who scored poorly on their first writing task improved more when given access to ChatGPT than those with high scores on the initial task.

• Peng et al. (2023) also found suggestive evidence that GitHub Copilot was more helpful to developers with less experience.

• In an experiment with BCG employees completing a consulting task, the bottom-half of subjects in terms of skills benefited the most, showing a 43% improvement in performance, compared to the top half whose performance increased by 17% (Dell’Acqua et al. 2023).”

I would take these results with a grain of salt, however. High-skill performers often do different things to their low-skilled counterparts, something that standardized tests are unable to measure. League 2 player Erling Haaland is a better footballer than me not just because he can beat me on performance-related tests, but also because he does 30 things that I don’t. These 30 things are often much more difficult to measure. As we figure out how to use AI more effectively (and how to measure the results better), AI might actually increase the performance disparity between skilled and unskilled workers (most technology tends to reinforce differences, not reduce them). We already see some signs of this.

2. LLMs and Critical Thinking:

Instead of solely acting as assistants, LLMs can serve as "provocateurs" in knowledge work, challenging assumptions and encouraging critical thinking.

This approach can be particularly valuable for breaking down complex tasks, facilitating microproductivity by allowing individuals to focus on their strengths. The balance of skills required for work will shift, emphasizing critical analysis and integration of AI-generated content.

The way I see it, instead of using AI to try and come up with completely new/revolutionary micro-tasks, your best bet is to use it to ensure that your basics are covered. This frees up your cognitive space to think about the revolutionary space yourself. Done right- this can also be used to implement a high degree of standardization to our workflows: which can both enable future AI/data-driven projects and improve collaboration in your team by ensuring everyone is playing with a similar set of rules.

3. On Human-AI Collaboration:

Effective human-AI collaboration hinges on understanding how to prompt, complement, rely on, and audit LLMs. Prompt engineering plays a crucial role in generating desired outputs, but it remains challenging to consistently construct effective prompts. Research is actively improving LLM instruction compliance, and tools are being developed to assist users in crafting better prompts.

Overreliance on AI can be detrimental, leading to poorer performance than either humans or AI acting alone. To mitigate this, AI systems need to be designed to promote appropriate reliance through features like transparency, uncertainty visualization, and co-audit tools.

4. LLMs for Team Collaboration and Communication:

LLMs can enhance team collaboration and communication by providing real-time and retrospective feedback on meeting dynamics. This feedback can encourage more equal participation and agreement, but it should be tailored to specific teams and delivered in a way that avoids cognitive overload. There is also a concern that AI may not be able to adapt to neurodivergent folk, or to understand the expressions of people from different cultures. This is why you shouldn’t use purely automated systems to make important judgments about people.

LLMs can also help teams plan and iterate on workflows by tracking task interdependence, allocating roles effectively, and identifying potential bottlenecks.

5. Knowledge Management and Organizational Changes:

LLMs have the potential to address the long-standing problem of knowledge fragmentation in organizations by drawing on information from diverse sources and presenting it in a unified manner.

This can help eliminate knowledge silos and enable users to access information more efficiently, but it also raises ethical considerations related to data privacy and access control.

6. Implications for Future Work and Society:

The introduction of AI into workplaces is a sociotechnical process, with the potential to both enhance and disrupt the nature of work.

Addressing adoption disparities and fostering innovation are crucial to ensure that AI benefits everyone.

Leadership will need to adapt to the changing landscape, embracing a scientific approach to experimentation, learning, and sharing insights.

The future of work is not predetermined; it is a choice that we have the power to shape. There are no true experts and we’re all trying to figure things out. Your best bet is to iterate quickly, think deeply about your experiments, and keep learning.

We'll spend the rest of this article discussing these ideas in more detail. Let’s get right into it.

An in-depth look at the impact of LLMs on Information Work

The following image summarizes the key themes very well-

Generative AI makes a clear, undeniable contribution to reducing the cognitive load from repetitive work, significantly improving experience- “68% of respondents agreed that Copilot actually improved quality of their work…participants with access to Copilot found the task to be 58% less draining than participants without access…Among enterprise Copilot users, 72% agreed that Copilot helped them spend less mental effort on mundane or repetitive tasks.”

The impacts on quality are a bit more diverse. In a meeting summarization study, we see a slight reduction in performance, “in the meeting summarization study where Copilot users took much less time, their summaries included 11.1 out of 15 specific pieces of information in the assessment rubric versus the 12.4 of 15 for users who did not have access to Copilot.” This is not a super-significant difference but it definitely highlights the importance of having a human in the loop to audit the generation. In this sense, it seems like LLMs can be very helpful in creating a good first draft very quickly- leaving the refinement and improvements to the user (something 85% of the respondents agreed to).
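To put the rubric numbers in perspective, here is a quick back-of-the-envelope calculation. It only uses the two figures quoted above (11.1 and 12.4 out of 15). The function names are my own, chosen for illustration, and this is a rough sketch rather than anything taken from the report-

```python
# Rubric numbers quoted from the report's meeting summarization study.
RUBRIC_ITEMS = 15
WITH_COPILOT = 11.1
WITHOUT_COPILOT = 12.4


def coverage(items_found: float, total: int = RUBRIC_ITEMS) -> float:
    """Share of rubric items that a summary captured."""
    return items_found / total


def relative_drop(baseline: float, observed: float) -> float:
    """Fractional decrease of `observed` relative to `baseline`."""
    return (baseline - observed) / baseline


if __name__ == "__main__":
    print(f"Coverage with Copilot:    {coverage(WITH_COPILOT):.1%}")
    print(f"Coverage without Copilot: {coverage(WITHOUT_COPILOT):.1%}")
    print(f"Absolute gap: {WITHOUT_COPILOT - WITH_COPILOT:.1f} items")
    print(f"Relative drop: {relative_drop(WITHOUT_COPILOT, WITH_COPILOT):.1%}")
```

Run as a script, this prints coverage of 74.0% with Copilot versus 82.7% without, a gap of 1.3 items, and a relative drop of about 10.5%. That is small enough to live with for a first draft, but large enough that someone should check the summary before it gets sent around.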

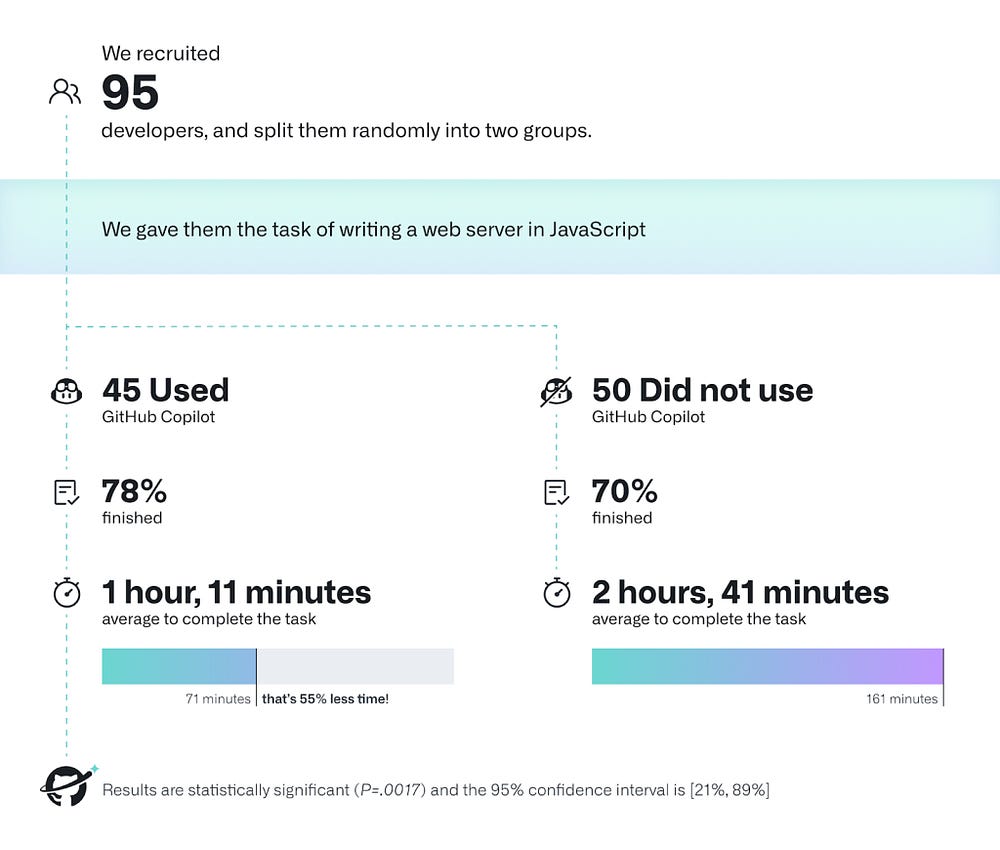

On more domain-specific tasks, LLMs can introduce a very noob-friendly meta by raising the performance floor- “In the other direction, the study of M365 Defender Security Copilot found security novices with Copilot were 44% more accurate in answering questions about the security incidents they examined.” You can see something similar for yourself- with tools like DALL-E that allow anyone to make good images. This is what leads to the impression that AI can help replace experts in their respective fields. For example, the usage of GitHub Copilot leads to significantly better performance for programmers-

However, the reality is a lot more complicated. While such tools can be very helpful- they also introduce all kinds of unpredictable errors and vulnerabilities in systems. This is where Domain Expertise is key, since it will help you evaluate and modify the base output to your needs (the first draft concept shows up again). The most effective usage of LLMs often involves guiding it towards the correct answer. So for knowledge workers- it is crucial to know what to do. LLMs/Copilots can take care of the how.

Using AI for knowledge work always comes with the risk of overreliance and lax evaluations (we humans are prey to something called automation bias, where we give undue weight to any decision taken by an automated system). This is why a large part of my work involves building rigorous evaluation pipelines, better transparency systems, and controlling for random variance for my clients. Without these, teams can end up with an incomplete picture of their system- leading to catastrophically wrong decisions (cue Air Canada not testing their system and it offering refunds to people).
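To make the "random variance" point concrete, here is a minimal sketch of one sanity check: score each variant of a system over several repeated runs, then ask whether the gap between them is bigger than the run-to-run noise. Everything in it is an assumption for illustration- the function names, the threshold `k`, and the scores are hypothetical, and the comparison is a crude rule of thumb, not a formal significance test-

```python
import statistics


def summarize(scores: list[float]) -> tuple[float, float]:
    """Return the mean and sample standard deviation of repeated-run scores."""
    return statistics.mean(scores), statistics.stdev(scores)


def difference_exceeds_noise(a: list[float], b: list[float], k: float = 2.0) -> bool:
    """Crude check: is the gap in means larger than k times the larger spread?

    A rule of thumb for illustration only, not a formal statistical test.
    """
    mean_a, sd_a = summarize(a)
    mean_b, sd_b = summarize(b)
    return abs(mean_a - mean_b) > k * max(sd_a, sd_b)


if __name__ == "__main__":
    # Hypothetical accuracy scores from five repeated runs of two prompt variants.
    variant_a = [0.81, 0.79, 0.83, 0.80, 0.82]
    variant_b = [0.82, 0.80, 0.84, 0.79, 0.83]
    print("Variant A (mean, stdev):", summarize(variant_a))
    print("Variant B (mean, stdev):", summarize(variant_b))
    print("Gap exceeds noise:", difference_exceeds_noise(variant_a, variant_b))
```

With these made-up scores, the gap between the two variants is smaller than the spread within each one, so the check returns `False`. A team that ran each variant only once could easily have declared a winner based on noise.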

With all of that covered, let’s move on to the next section. How can we use AI to improve critical thinking and creativity? How can humans use AI effectively?

How to combine LLMs with people for better Creativity, Speed, and Collaboration

To answer this question, let’s first understand the biggest problems faced by a lot of teams- cognitive overload, knowledge fragmentation, and a lack of feedback.

When it comes to reducing cognitive overload, AI-based tools can be used for delegations, planning, and quick ‘load balancing’. Once again, the goal here isn’t to have AI do this perfectly, but for it to save time for users that would otherwise do this manually-

Next, let’s cover knowledge fragmentation. Large organizations have a lot of projects happening, and key people often leave due to turnover, promotions, or retirement. In this environment, keeping track of all that is happening and already done becomes impossible- and there is a lot of wasted effort reinventing the wheel.

“Knowledge fragmentation is a key issue for organizations. Organizational knowledge is distributed across files, notes, emails (Whittaker & Sidner 1992), chat messages, and more. Actions taken to generate, verify, and deliver knowledge often take place outside of knowledge 'deliverables’, such as reports, occurring instead in team spaces and inboxes (Lindley & Wilkins 2023). LLMs can draw on knowledge generated through, and stored within, different tools and formats, as and when the user needs it. Such interactions may tackle key challenges associated with fragmentation, by enabling users to focus on their activity rather than having to navigate tools and file stores, a behavior that can easily introduce distractions (see e.g., Bardram et al. 2019). However, extracting knowledge from communications raises implications for how organization members are made aware of what is being accessed, how it is being surfaced, and to whom. Additionally, people will need support in understanding how insights that are not explicitly shared with others could be inferred by ML systems (Lindley & Wilkins 2023). For instance, inferences about social networks or the workflow associated with a process could be made. People will need to learn how to interpret and evaluate such inferences”

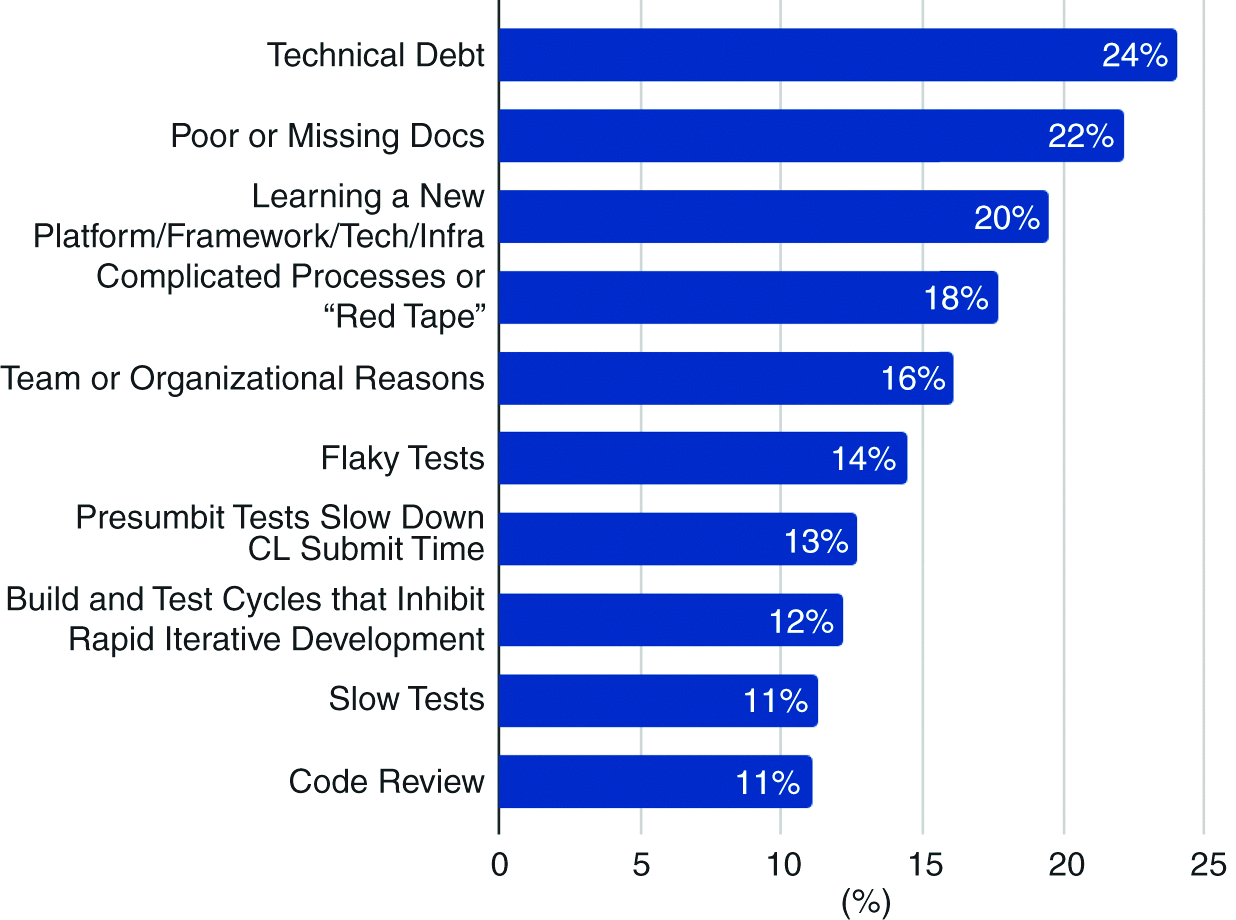

This is a theme we see in a few different studies. Google has an excellent publication on what software devs want from AI. Both the 2nd and 3rd reasons mentioned below can be addressed (at least partially) by using AI to aggregate insights across platforms and unify them into one place that people can refer to.

We covered that publication in-depth over here. The final section- which talks about concrete steps that orgs must take to fully unlock their AI potential will be relevant to you, even if you’re not an AI/Tech Company. For now, the simple takeaway is to encourage active documentation/logging so that your AI has plenty of data, and to invest heavily into AI systems that can interact with that Data in a useful manner.

We can summarize the main ideas in this section as follows-

Use AI to pull insights from various domains and present them coherently in one place. This will help address knowledge fragmentation, which will improve the collaboration within your team. This is key to improving the creativity of your organization.

Use AI to free up mental space by taking care of data-driven delegations and speeding up your planning. This will free up your management time to think about vision and other big-picture stuff.

Combine this with the usage of Copilot-like tools for knowledge workers, and you get something really powerful. Let’s end this with a discussion of the implications and the future of work.

How AI can shape the future of work and society

As with any disruptive technology, AI will change not only how we do things, but also fundamentally what we do and what becomes important. We’re already seeing some of this. Slide 11 brings up an interesting possibility- where knowledge work may shift towards more analysis and critical integration as opposed to raw generation.

As opposed to a naked replacement that many people claim- I think that people will simply have to dedicate a lot more time to the evaluation. Checking outputs, sources, and the base analysis of the AI are all a must, and we’ll all probably spend a lot more time on that. Thus, there is a lot to be gained by investing in your skills for the same (or building tools there).

Similarly, soft skills and the general ability to push other people to get shit done would become even more important-

“Skills not directly related to content production, such as leading, dealing with critical social situations, navigating interpersonal trust issues, and demonstrating emotional intelligence, may all be more valued in the workplace (LinkedIn 2023)”

With a powerful tool like AI, accessibility also becomes an important discussion point. There are two important dimensions of accessibility-

Reducing the barrier to entry for using AI, ensuring that everyone can benefit from these tools. This is something that organizations like OpenAI, Google, Meta, and the general Open Source community have done very well. By bringing fire to the people, these organizations have put potential in everyone’s hands. And as much as I critique (and will continue to critique) these orgs, I think this contribution should be commended and admired.

To truly actualize the potential and bring change- we need to teach people how to best utilize these technologies. Presently this knowledge is locked behind expensive institutions, inaccessible research, and heaps of misinformation. To unlock the power that will come from democratizing AI, we require a much stronger grassroots effort. Otherwise, we will see the divide between the haves and have-nots grow since the former group will have the tools to massively boost their productivity while the latter will struggle to compete.

The second is critical, but much harder. Open-sourcing research/other important ideas in AI is my goal and the reason why my primary publication- AI Made Simple- doesn’t have any paywalls. However, that’s a very small part of what needs to be done. I have some ideas on what we can do to push things forward- but this is something that needs a lot of open conversations from a lot of people. If you have any ideas/want to discuss things with me, shoot me a message and let’s talk. Once again, you can find my primary publication AI Made Simple over here, message me on LinkedIn, or reach out to me through any of my social media over here.
