Innovation Secrets: Building Bell Labs-Level Innovation Teams

What allowed the world's most innovative team to build the Modern World

Hey there, I’m Devansh. I write for an audience of ~200K readers weekly. My goal is to help readers understand the most important ideas in AI and Tech from all important angles- social, economic, and technical. You can find my primary publication AI Made Simple over here, message me on LinkedIn, or reach out to me through any of my social media over here. I work as a consultant for clients looking to understand AI and Software and how they can use it to significantly boost productivity (or get insights in the industry), so if you have any questions, please reach out.

My article on the business and organizational incentives that pushed Scaling to become a dominant factor in AI seemed to really click with people (although that might’ve just been the Jose meme).

One of the most popular questions I received after that- especially from Managers/Leadership and Investors- was about the alternatives. How can we establish more high-functioning innovation teams that will do the kind of revolutionary work that establishes a new era?

To answer this question, let me first ask you another one-

What is common between the C programming language, Solar Cells, the Laser, and the legendary operating system UNIX?

Take a second to think it over.

All of these were invented by people in one group, Bell Labs, within the span of roughly two decades (from the silicon solar cell in 1954 to C in the early 1970s). Talk about a 20-year challenge.

And if you think I’m cherry-picking a few examples, feel free to look into the company yourself. You’ll find that this company laid the foundations of basically every big accomplishment that created the modern world. Nine different Nobel Prizes have been awarded for work done at Bell Labs. Let that number sink in. This was a group of people that made the PayPal Mafia look like a group of children.

So, what was so special about the environment at Bell Labs? What was it that allowed the members to accomplish so many great things? I’ve talked to some people and done a bit of reading into this. In this article, I want to break down what I think set Bell Labs apart and allowed them to make so many amazing contributions to science. By emulating them, we will be able to maximize the productivity/impact of our own research groups (something I’m particularly interested in since I hope to lead my own Research Lab one day).

My focus for this article will be on leaders, managers, and policymakers who want to create environments that will enable the research, not on individual researchers who want to be more productive. We have other articles on personal productivity (and will cover more).

To understand what made Peak Bell Labs so cracked at cutting-edge research, it’s first important to understand why research (especially the kind that defines eras and puts you on science’s equivalent of pin-up calendars) is hard. This will contextualize our solutions better. And that is where we will begin our discussions-

Executive Highlights (TL;DR of the article)

Why Cutting-Edge Research is Expensive: A lot of people think about high scientist salaries or the costs of cutting-edge equipment as the primary reason why research can be expensive. And while those costs can be substantial, there’s also an overlooked reason why science at the edge can be so expensive- we don’t understand the edge. By definition, the cutting edge is the least understood part of our knowledge systems, and it is full of unknowns (both known unknowns and unknown unknowns). We have to build and solidify the shaky foundations of our knowledge as we go. Imagine how hard it would be to balance on a tight, narrow ledge if we had to build it as we walked along (and at times go back to build new paths or replace sections we had already built). That is what research feels like.

Understanding the Bell Labs setup- Bell Labs had a few quirks that gave them a leg up when it came to leading research: They hired a lot of (very smart) people, had a culture of collaboration that ensured that these people were working together, and they were a private company- so they didn’t have to worry about short-term targets. This allowed them to put money into long-term projects w/o worrying about short-term investors or quick returns. Bell Labs also had a lot of money to invest, which allowed them to fund experiments, a must given the high failure rate of cutting-edge research.

How you can replicate this- You can’t always copy-paste their entire setup. However, there are a few different things you can use to create a similar environment. This is a combination of-

Financial planning: have money in reserve and then allocate a certain percentage to the more experimental projects.

Great culture (encourage initiative, don’t punish mistakes, and have open communication).

Forcing collaboration (especially in Remote Environments where people might not get the opportunity to interact with each other).

Building up a Robust Knowledge Base that allows you to work with various kinds of experts (you don’t have to be an expert in things yourself, but more knowledge will help you think about the impact of your work from different lenses AND is key to being able to interface with experts in different fields). Hiring people who have a multi-disciplinary bent is similarly key to ensuring that your lab members can interface with each other.

Loyalty to ensure people stay with your team for a long time, so they can run their experiments. If people are constantly leaving, then your teams will struggle to do consistent high-impact research.

I will summarize my conversations with various leaders in some of the most high-impact teams in tech that are able to balance research with quick business impacts (I figured this might be more interesting/relevant than the results expected from more pure research labs which have a longer time horizon). If that sounds interesting, let’s explore these ideas in greater detail.

Let’s start by exploring the difficulties inherent in research

Why Research is a Costly Process

When most people think about the costs of research, they focus on the obvious: expensive equipment, competitive salaries for PhD researchers, and costly materials. While these are significant, they're just the tip of the iceberg. The true challenges of cutting-edge research lie not so much in the logistics as in the nature of the field itself-

In actual research, you're not just solving a known problem - you're discovering what the real problems are as you go along. This creates a unique set of challenges that make research particularly demanding and expensive.

Recursive Uncertainty:

Each breakthrough typically reveals new layers of complexity we didn't know existed. Solutions often require solving prerequisite problems that we only discover along the way, and the path forward frequently requires backtracking and rebuilding our foundational understanding. In science, we’re all a bit like Jon Snow, who knows nothing. We trudge along, clinging to our limited knowledge, only to occasionally (or frequently if you’re me) learn that knowledge we were absolutely confident about is completely wrong.

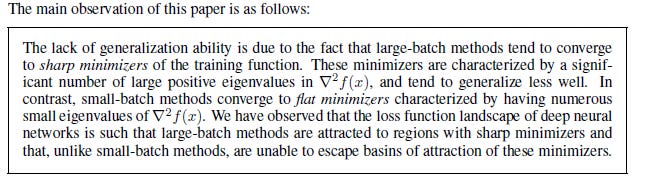

For an example close to home, think back to the research around Batch Sizes and their Impact in Deep Learning. For the longest time, we were convinced that Large Batches were worse for generalization- a phenomenon dubbed the Generalization Gap. The conversation seemed to be over with the publication of the paper- “On Large-Batch Training for Deep Learning: Generalization Gap and Sharp Minima” which came up with (and validated) a very solid hypothesis for why this Generalization Gap occurs.

“…numerical evidence that supports the view that large-batch methods tend to converge to sharp minimizers of the training and testing functions - and as is well known, sharp minima lead to poorer generalization. In contrast, small-batch methods consistently converge to flat minimizers, and our experiments support a commonly held view that this is due to the inherent noise in the gradient estimation.”

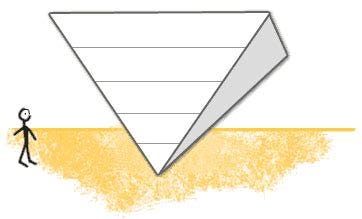

There is a lot stated here, so let’s take it step by step. The image above is an elegant depiction of the difference between sharp minima and flat minima.

Once you’ve understood the distinction, let’s understand the two (related) major claims that the authors validate:

Using a large batch size makes the network converge to sharp minima of the loss landscape, and these sharp minima are what reduce the network’s ability to generalize.

Smaller batch sizes converge to flatter minima, which the authors attribute to the noise in gradient estimation.

The authors provided a bunch of evidence for these claims (check out the paper if you’re interested), creating what seemed like an open-and-shut matter.
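To make the sharp-versus-flat distinction concrete, here is a toy sketch of our own (not an experiment from the paper): two one-dimensional quadratic losses share the same minimum at w = 0 but differ in curvature. The `shift` parameter is an assumed stand-in for the small mismatch between the training and test loss surfaces. Nudging the weight by the same amount raises the loss about 100x more at the sharp minimum, which is the intuition behind "sharp minima generalize worse."

```python
# Toy illustration (our assumption, not the paper's setup): two 1D losses,
# both minimized at w = 0, differing only in curvature.

def sharp_loss(w: float) -> float:
    # High curvature: the loss climbs steeply away from the minimum.
    return 50.0 * w ** 2


def flat_loss(w: float) -> float:
    # Low curvature: the loss climbs gently away from the minimum.
    return 0.5 * w ** 2


def loss_increase(loss_fn, w_star: float, shift: float) -> float:
    """How much the loss rises when the minimizer is evaluated at w_star + shift.

    `shift` is a hypothetical stand-in for the small offset between the
    training loss surface and the test loss surface.
    """
    return loss_fn(w_star + shift) - loss_fn(w_star)


shift = 0.1
sharp_gap = loss_increase(sharp_loss, 0.0, shift)
flat_gap = loss_increase(flat_loss, 0.0, shift)
print(f"sharp minimum: loss rises by {sharp_gap:.3f}")
print(f"flat minimum:  loss rises by {flat_gap:.3f}")
```

Real networks have millions of dimensions, and "sharpness" has to be measured more carefully there, but the one-dimensional picture is the core of the argument.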

However, things were not as clear as they seemed. It turns out that the gap was largely the result of large-batch models getting fewer updates. If a model uses double the batch size, it will by definition go through the dataset with half as many updates per epoch. If we account for this with an adapted training regime (as Hoffer et al. did in “Train longer, generalize better: closing the generalization gap in large batch training of neural networks”), the large-batch learners catch up to the small-batch ones-

This allows us to keep the efficiency of Large Batches while not killing our generalization.

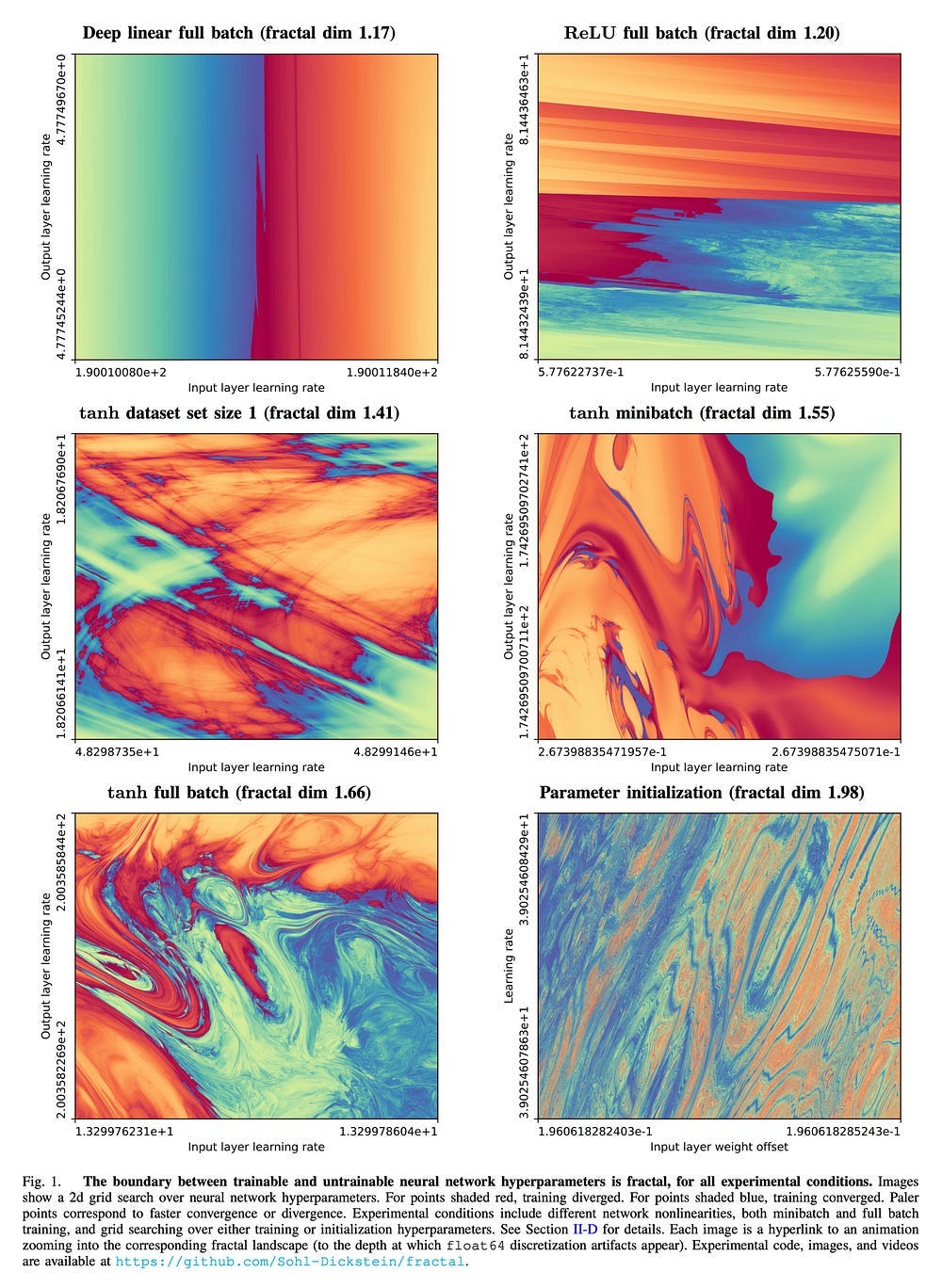
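The update-count argument is simple arithmetic. The sketch below uses illustrative numbers and hypothetical helper names to count optimizer steps per epoch and work out how many epochs a large-batch run needs to match the small-batch run's total updates. It captures only the "more updates" part of an adapted training regime; it does not model learning-rate changes or any other adjustments a real regime might include.

```python
import math


def updates_per_epoch(dataset_size: int, batch_size: int) -> int:
    # One optimizer update per mini-batch; a partial final batch still counts.
    return math.ceil(dataset_size / batch_size)


def total_updates(dataset_size: int, batch_size: int, epochs: int) -> int:
    return updates_per_epoch(dataset_size, batch_size) * epochs


def epochs_to_match_updates(dataset_size: int, small_batch: int,
                            large_batch: int, small_epochs: int) -> int:
    """Epochs a large-batch run needs to take at least as many updates
    as the small-batch run (a simplified stand-in for regime adaptation)."""
    target = total_updates(dataset_size, small_batch, small_epochs)
    per_epoch = updates_per_epoch(dataset_size, large_batch)
    return math.ceil(target / per_epoch)


N = 51_200  # illustrative dataset size, divisible by both batch sizes
small, large, epochs = 128, 256, 100

print("small-batch updates:", total_updates(N, small, epochs))
print("large-batch updates (same epochs):", total_updates(N, large, epochs))
print("epochs large batch needs to match:",
      epochs_to_match_updates(N, small, large, epochs))
```

Doubling the batch size halves the updates per epoch (400 to 200 here), so the large-batch run needs twice the epochs to take the same 40,000 steps.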

This happens a lot in Research, where learning something new only leaves us with more questions or makes us question what we thought we knew. For example, learning that the boundary between trainable and untrainable neural network hyperparameter configurations is fractal only left us with more questions about why this happens and what we can do with it.

Good research starts trying to answer questions but often leaves us with more questions than before. This can lead to a very costly tax-

The "Unknown Unknowns" Tax

In cutting-edge research, we often don't know what we don't know. This makes initial cost and time estimates inherently unreliable. Success criteria may shift as understanding evolves, and the most valuable discoveries sometimes come from unexpected directions.

This uncertainty creates a tax on research efforts - we need to maintain flexibility and reserve capacity to pursue unexpected directions, even though we can't predict in advance which directions will be most valuable. This is why 80-20’ing your way into scientific breakthroughs is so hard and why stories like Edison trying 1000 iterations of the bulb are common amongst inventors/scientists.

The Tooling Paradox

One of the most expensive aspects of cutting-edge research is that researchers often need to invent new tools to conduct their experiments. This creates a "meta-research" burden where significant time and resources go into creating the infrastructure needed even to begin the primary research.

Researchers frequently find themselves needing to create new measurement methods to validate their results and develop new frameworks to interpret their findings. This double layer of innovation - creating tools to create discoveries - adds substantial complexity and cost to the research process. If you’ve ever looked at LLM benchmarks, been frustrated at how badly they translate to your use-case, and created your own set of evals- then you know this pain well.
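As a minimal illustration of that kind of tool-building, here is a sketch of a tiny custom eval harness. `EvalCase`, `run_evals`, and `fake_model` are hypothetical names, the substring check is a deliberately crude scoring rule, and `fake_model` stands in for whatever model call you actually use.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    prompt: str
    must_contain: List[str]  # substrings a good answer should include


def run_evals(model_fn: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Fraction of cases whose output contains every required substring."""
    if not cases:
        return 0.0
    passed = 0
    for case in cases:
        output = model_fn(case.prompt).lower()
        if all(s.lower() in output for s in case.must_contain):
            passed += 1
    return passed / len(cases)


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; always gives the same answer.
    return "Refunds are processed within 5 business days."


cases = [
    EvalCase("How long do refunds take?", ["5 business days"]),
    EvalCase("Can I get my refund in cash?", ["cash"]),
]
print(f"pass rate: {run_evals(fake_model, cases):.2f}")
```

In practice the scoring rule is where most of the effort goes: substring matching is easy to build but brittle, which is exactly why teams keep iterating on their own evals.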

Non-Linear Progress Paths

Research doesn't follow a predictable linear path. Instead, it typically involves multiple parallel explorations, most of which won't pan out. Teams often pursue seemingly promising directions that end up being dead ends, while unexpected discoveries might require pivoting the entire research direction. Even progress often comes in bursts after long periods of apparent stagnation. This was one of the reasons that we identified for why Scaling was so appealing- it seemed to offer predictable returns in an otherwise unpredictable field.

Compared to software engineering, science requires very long cycles, which can include months of research before committing to an approach, and extensive analysis to understand the outcomes of experiments.

-Dr. Chris Walton, Senior Applied Science Manager at Amazon.

This non-linear nature makes it particularly challenging to manage and fund research effectively, as traditional metrics of progress don't capture the real value being created during exploratory phases.

Communication Overhead

Problems are interdisciplinary; experts often are not (especially with the extreme specialization these days). Experts from different fields often lack a common vocabulary, and different disciplines have different methodological assumptions. Bridging these knowledge gaps requires significant time investment, and documentation needs are much higher than in single-discipline research.

These reasons, and many more, make research a uniquely difficult process. Misunderstanding/underestimating the challenges in this space often leads to organizations where scientists and management are at odds with each other (hence the stereotypical stories of the scientists with their heads in the clouds researching non-issues or the constant depiction of management that shuts down funding for a scientist before their big breakthrough).

So how did Bell Labs navigate this to create one of the most influential and impactful labs ever? Let’s take a second to understand that.

How Bell Labs Broke Research

Why was Bell Labs so good at Research?

I’m going to give you a short and long answer to this question.

Short Answer- they perfected the OG (I’m talking real OG) Venture Capital Model. They invested in very risky and experimental research over long periods of time in hopes of massive payouts. Most ventures could fail, but one era-defining win would be huge. The Dutch East India Company would be so proud.
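The logic of that bet can be written down directly. Assuming independent projects that each succeed with probability p, the chance that at least one of n projects succeeds is 1 - (1 - p)^n. The sketch below uses made-up numbers (not Bell Labs data) to show how a portfolio of long shots can still be a reasonable bet.

```python
def prob_at_least_one_win(p_success: float, n_bets: int) -> float:
    """Chance that at least one of n independent bets succeeds."""
    # P(no wins) = (1 - p)^n, so P(at least one win) = 1 - (1 - p)^n.
    return 1.0 - (1.0 - p_success) ** n_bets


def expected_net_return(p_success: float, n_bets: int,
                        cost_per_bet: float, payoff_if_win: float) -> float:
    """Expected total payoff minus total cost for n independent bets."""
    return n_bets * (p_success * payoff_if_win - cost_per_bet)


# Illustrative assumptions: 20 projects, 5% success rate each,
# each costs 1 unit, and a success pays 100 units.
p, n = 0.05, 20
print(f"P(at least one win): {prob_at_least_one_win(p, n):.2f}")
print(f"Expected net return: {expected_net_return(p, n, 1.0, 100.0):.1f}")
```

With a 5% hit rate, any single project fails 95% of the time, but across 20 projects the chance of at least one win is about 64%, and a large enough payoff covers all the misses. The independence assumption is generous; correlated failures make the real picture riskier.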

Now for the long answer.

Bell Labs had the following aspects that allowed them to play the long game-

Reliable Money- Bell Labs had a lot of money. As the research arm of AT&T, which held a monopoly over American telecom, it had access to a steady stream of money that came in at regular intervals. This allowed them to do the following.

Aggressive Hiring- Bell Labs hired a lot of people. These were very smart people with advanced qualifications/degrees in all kinds of engineering/scientific disciplines. Getting all these smart people together was crucial to their success.

Collaboration- In an interview, Jon Gertner, the author of The Idea Factory: Bell Labs and the Great Age of American Innovation, stated that a lot of the work at Bell Labs was by its nature collaborative. The company had an ‘open door policy’ that allowed communication and collaboration between these smart people to really prosper.

Long-Term Thinking- Research at Bell Labs could take many years to complete. The leadership was willing to commit to such projects. Thus researchers could take their time building amazing projects. This is one of the things that sets them apart from current Big Tech, who are often pressured for short-term, quarterly returns by their investors (an idea we’ve talked about many times). For example, early versions of the Metaverse were skewered by the investors even though -

Meta as a company was still immensely profitable

The Metaverse was a great gamble to both build a stronger moat (against competitors) and reduce their reliance on Apple, Google, etc (Apple’s data changes for example cost Meta over $10 billion in sales revenue)

It would allow Meta to benefit further from platform economics AND increase revenues by also getting into Hardware.

just b/c they didn’t see immediate returns (FYI, I wrote about Why the Metaverse was good business in late 2022, when the hate was at its peak).

Bell Labs was able to avoid this unnecessary pressure and continue to invest in research.

Combined, this set up an environment for great things to happen. The Bell Labs experiment was a lot like Ancelotti’s teams- you get great players, give them tactical freedom, and let the magic happen. As the Bell Labs experts interacted with each other, they were able to combine their skills to solve all kinds of problems. Researchers weren’t under the pressure of meeting short-term targets, which allowed them to focus on their craft and create excellent products.

Some companies have clearly been inspired by this. Google, for example, has X, the Moonshot Factory.

X is a diverse group of inventors and entrepreneurs who build and launch technologies that aim to improve the lives of millions, even billions, of people. Our goal: 10x impact on the world’s most intractable problems, not just 10% improvement. We approach projects that have the aspiration and riskiness of research with the speed and ambition of a startup.

So how can you learn from this? What do you need to do to ensure that you can foster an environment of excellence? How can you spawn high-performing teams throughout? Let’s cover that next.

Replicating the Bell Labs experiment

How can you create the next great group of inventors and innovators? Here are a few things that can help-

Have a war chest- Not everyone has the money of a government, Google, or a monopoly backing them. But this doesn’t mean you can’t replicate this effect on a smaller scale. Make sure your group has a constant stream of revenue coming in. Allocate a certain percentage of these funds (10–20% is a good number) as the ‘moonshot budget’. If your group doesn’t have direct say over funds, allocating engineering/working time to tinkering around with moonshots is a good alternative. This has been the approach at SVAM. The consultants in our team are allocated a certain amount of “free learning time” where we play with different technologies that might be useful to future projects and present what we have learned in a weekly meeting. A core reason I was hired was to guide this research initiative (I read things and share insights that would help guide the other researchers). This has led to lots of great insights, many of which I have shared on this newsletter- such as our work on Deepfake Detection.

Cram Experts Together- If you can bring a lot of experts together, great things are bound to happen. Create group chats, meetups, and events where experts from various domains can meet and interact. Give them problems you’re interested in solving, and you will reap the rewards. I’ve covered this principle in my article on what it took to set up India’s 200 Billion Dollar Software Industry (get drunk with people of common interests).

Force Communication- I’m a huge fan of remote and async work. However, it makes the unplanned ‘watercooler talks’, which lead to many great discoveries, harder. As a leader/policy-maker, you should enforce this somehow. Make your reports go out and interact with people and see what they’re up to. A great way to do this is to make them read/talk to different people about how they solved various problems. One of my strongest recommendations would be to start a reading/discussion group for engineering blogs/research papers, which will allow your team to learn from others and develop better judgment. It will also give them an excuse to meet other people in your teams.

A good case study for this is Fidel Rodriguez, who leads one of LinkedIn’s most important teams (Sales Analytics, deep-dive on him soon), and one of his favorite techniques- a Gemba Walk. Imagine your team has built a feature. Before shipping it out, have a complete outsider ask you questions about it. They can ask you whatever they want. This will help you see the feature as an outsider would, which can be crucial in identifying improvements/hidden flaws. This is a good way to uncover cognitive blind spots that your team might have, and is a very natural way to get your team looking at problems from a multi-disciplinary perspective. Something similar can be very impactful to ensure your researchers are not tunnel-visioned into one way of doing things.

Develop your knowledge- To be able to guide these teams, you will need to develop your own domain expertise. You don’t have to go super deep (you shouldn’t since your time is limited), but rather master the fundamental ideas enough to be able to interact with various experts and connect them with other resources. A good framework for this model was something I picked up from Muhammad Sajid, a senior leader at AWS Nordics- called the reverse pyramid. Here, you pick an area to go deep into (AI for me, Cloud for him) and go into the other ones with a lower intensity. This enables you to act as the glue that coordinates experts from different fields, enabling more multidisciplinary (and thus higher value) innovation.

Play the Long Game- You will have to eat a lot of losses along the way. This is expected. In such cases, you must be willing to be like a goldfish and forget about them (metaphorically). Take the L and move on. Pro Tip- Document everything about your losses. This way others can learn from them and possibly improve on your processes. Use this article as a guide in creating great documentation.

Promote Initiative and autonomy- If you want an innovative group, you need a group of people willing to step up and take charge of different projects. You need to promote a lot of initiative in your teams. You will want to foster leaders amongst your group of experts who can guide the rest in your absence. On this note, it’s also important not to punish mistakes/failures too harshly. This will trigger the loss aversion in your teams, which will scare them away from their mission.

Applying these tips will be extremely helpful in taking the innovation and research in your groups to the next level. My biggest advice here would be to start small, somewhere close to home, and then get more ambitious as you proceed. This will allow you to minimize the added costs of research while building the culture slowly (culture is extremely important for research). If you have any thoughts, or are looking for help setting up research groups within your organization, give me a shout. Always excited to talk to you.

Thank you for reading and have a wonderful day.

Dev <3

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
